Net zero principles are being applied to this real-world Canada Water project

Architects often feel like the captain of the ship in a design team – coordinating the geometry, structure and services of a building is complex and requires input from everyone in the project team. This can feel particularly challenging when approaches, remits, scope and ways of thinking differ between the disciplines across the design team.

The reframing of building performance to focus on carbon over the past few years has, we think, given teams a renewed common purpose: a single metric by which to measure the effectiveness of collective decisions.

Institutional and collaborative industry efforts to codify this focus have helped create impetus: the RIBA 2030 Challenge and LETI’s Climate Emergency Design Guide in particular. Upcoming industry-wide collaborations – such as the Net Zero Carbon Buildings Standard – will, hopefully, refine the upper limits of our building carbon budgets.

Although Allford Hall Monaghan Morris (AHMM) has international clients across a wide range of sectors, the majority of its work is in London. This work is often mixed use, large scale and commercially driven, on tight urban sites. The practice has a history of collaborative working on projects such as the White Collar Factory, where the building works as a holistic system thanks to a close partnership with the structural and MEP engineers.

In 2018 and 2019, when much of the above guidance was beginning to emerge, it became clear that it did not really reflect the kind and scale of project on which we work, nor the constraints and opportunities those projects present. We decided to do something about that, and set up a collaboration with University College London’s (UCL’s) Institute for Environmental Design and Engineering (IEDE).

We have collaborated with UCL in the past on post-occupancy evaluation, which won the CIBSE Barker Silver Medal, but this was a bigger commitment – two years to take a deep dive into the most pressing issue facing our industry and to propose new ways of working.

The project took the form of a Knowledge Transfer Partnership, a scheme designed to benefit research institutions, businesses and researchers, and was funded by AHMM and Innovate UK. The research set out to understand the opportunities and challenges of designing, building, occupying and maintaining large-scale, urban, mixed-use developments. A series of workshops, bringing together industry consultants and AHMM architects, was held to identify the obstacles to, and opportunities for, achieving net zero carbon developments.

Net Zero Carbon Guide

The guide is in four parts, structured to take the reader from ‘carbon zero’ to ‘low emissions hero’. It is also set up as an interactive digital document that can be dipped into at any point.

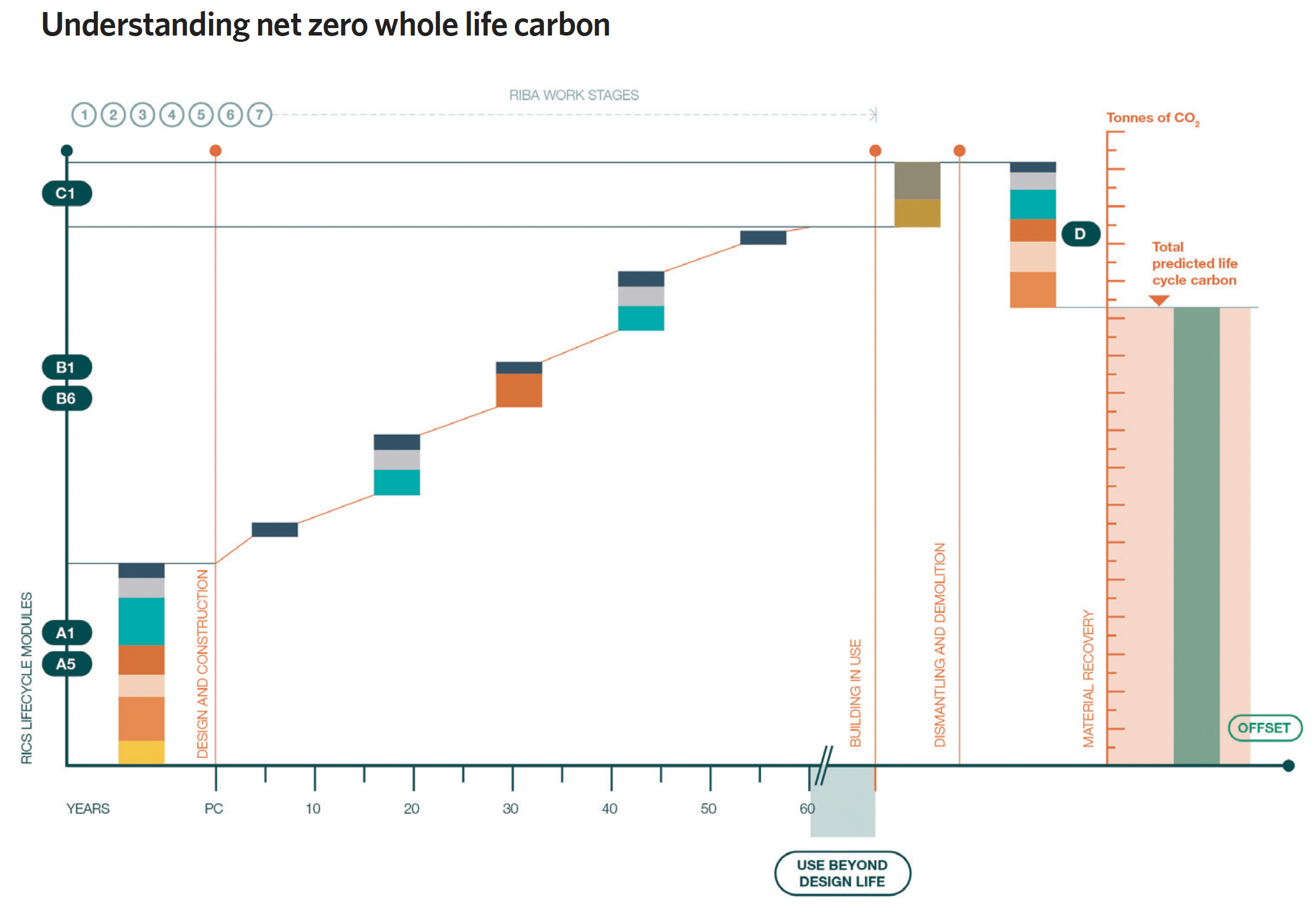

Part One defines the criteria that we, and the wider industry, use to assess the journey to net zero, drawing on existing industry guidance and methodologies such as RICS’ Whole life carbon assessment for the built environment. It then examines the specific parts of the definition of ‘net zero carbon’: the component ‘upfront’, ‘operational’ and ‘lifetime’ carbon, as well as offsets.

We examine the influence architects have over each part and how, with wider collaboration across the design team, these parts can be optimised as a system. We then assemble these components into a life-cycle visualisation that we believe will help design teams to interrogate decisions and understand the lifetime implications of materiality, construction and systems management.

Part Two outlines the key considerations and changing context that teams must review continuously. We identify the risk of an embodied carbon ‘performance gap’, much like the long-understood operational performance gap, and place the building life-cycle calculation in the context of wider energy systems, including decarbonisation of the electricity grid.
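The effect of grid decarbonisation on a life-cycle calculation can be illustrated in a few lines. The sketch below is a minimal illustration, not the guide’s method: it assumes an all-electric building with constant annual energy use, a 60-year study period (the RICS default) and a hypothetical grid carbon factor that falls linearly each year to a floor. The function names and every numerical input are placeholders; a real study would substitute published grid projections.

```python
# Minimal sketch: lifetime operational carbon with a static vs a decarbonising grid.
# All factors below are hypothetical placeholders, not published grid projections.

STUDY_PERIOD = 60  # years


def grid_factor(year, start=0.2, annual_drop=0.01, floor=0.02):
    """Hypothetical grid carbon factor in kgCO2e/kWh, `year` years after completion.

    Falls linearly from `start` by `annual_drop` each year, never below `floor`.
    """
    return max(start - annual_drop * year, floor)


def operational_carbon(energy_kwh_per_m2, years=STUDY_PERIOD, decarbonising=True):
    """Cumulative operational carbon in kgCO2e/m2 over `years` of use."""
    total = 0.0
    for year in range(years):
        # A static grid keeps the year-0 factor for the whole period.
        factor = grid_factor(year) if decarbonising else grid_factor(0)
        total += energy_kwh_per_m2 * factor
    return total


if __name__ == "__main__":
    eui = 90.0  # hypothetical annual energy use, kWh/m2 (all-electric building assumed)
    print(f"Static grid:        {operational_carbon(eui, decarbonising=False):.1f} kgCO2e/m2")
    print(f"Decarbonising grid: {operational_carbon(eui):.1f} kgCO2e/m2")
```

With these placeholder numbers the static grid gives 1,080kgCO₂e/m² over 60 years, while the decarbonising grid gives roughly a quarter of that. The exact figures mean little; the point is that the assumed grid trajectory can shift the balance between operational and embodied carbon, which is why it needs to be reviewed as the context changes.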

The guide describes two strategies for delivering net zero carbon – ‘net zero now’ and the ‘planned pathways approach’ – drawing out the implications of each, and describing where they might be appropriate. We then propose a new way of thinking about projects, by changing the ‘cost, quality, programme’ triangle to a square, including ‘carbon’ as an equal driver of decision-making.

The third section reviews and links to key resources to tool up a team, including international standards and other organisations’ guidance.

The final section offers a case study: Plot F at Canada Water in south London. This high-density, mixed-use development was designed for British Land and received planning permission in summer 2022. The client established net zero as an aspiration, and the design process and collaborative effort of the team – including Sweco as MEP engineers and AKT II as structural consultants – have provided an excellent opportunity to explore what adopting net zero carbon principles means in practice, and form the basis of our guidance for future projects.

Key lessons

The key lessons from the case study set guidelines for future projects. The first is the need to integrate iterative performance-based modelling across the design team. This means architects becoming more numerate and evidence-based in their decision-making and engineers becoming more comfortable with an iterative design process.

Second, project teams need to set clear and ambitious targets. In the case study, these were broken down into primary and secondary values for the whole building and individual components. This approach allowed for discrete studies to be carried out by individual consultants before these were pulled together in a combined, whole building model. Third, consultant appointments must recognise the significant shift in working practice that zero carbon buildings need. This should be reflected in scope, time and, ultimately, fees.

Fourth, clear communication of ambition is essential from the outset of a project. Continued communication of targets and assimilation of analytical outcomes are needed to track the journey to zero carbon. Fifth, early contractor engagement can bring new options to the design process and opportunities for subcontractor engagement. Sixth, team coordination is more important than ever.

It was necessary for each consultant to bring their own analysis skills to the embodied carbon calculations. It was also important that this analysis could be brought together in the whole life model to chart progress of the design against carbon reduction targets.

Seventh, and finally, the capability of the design team to support the client aspiration was essential for delivering carbon reductions on this project. This may require wider upskilling of the industry to deliver zero carbon buildings as a matter of course.

The AHMM/UCL IEDE guide is available to download and is intended as a live guidance document. It will be subject to ongoing critical review, and the next release will feature a calculation spreadsheet to help others in industry deliver net zero carbon buildings.

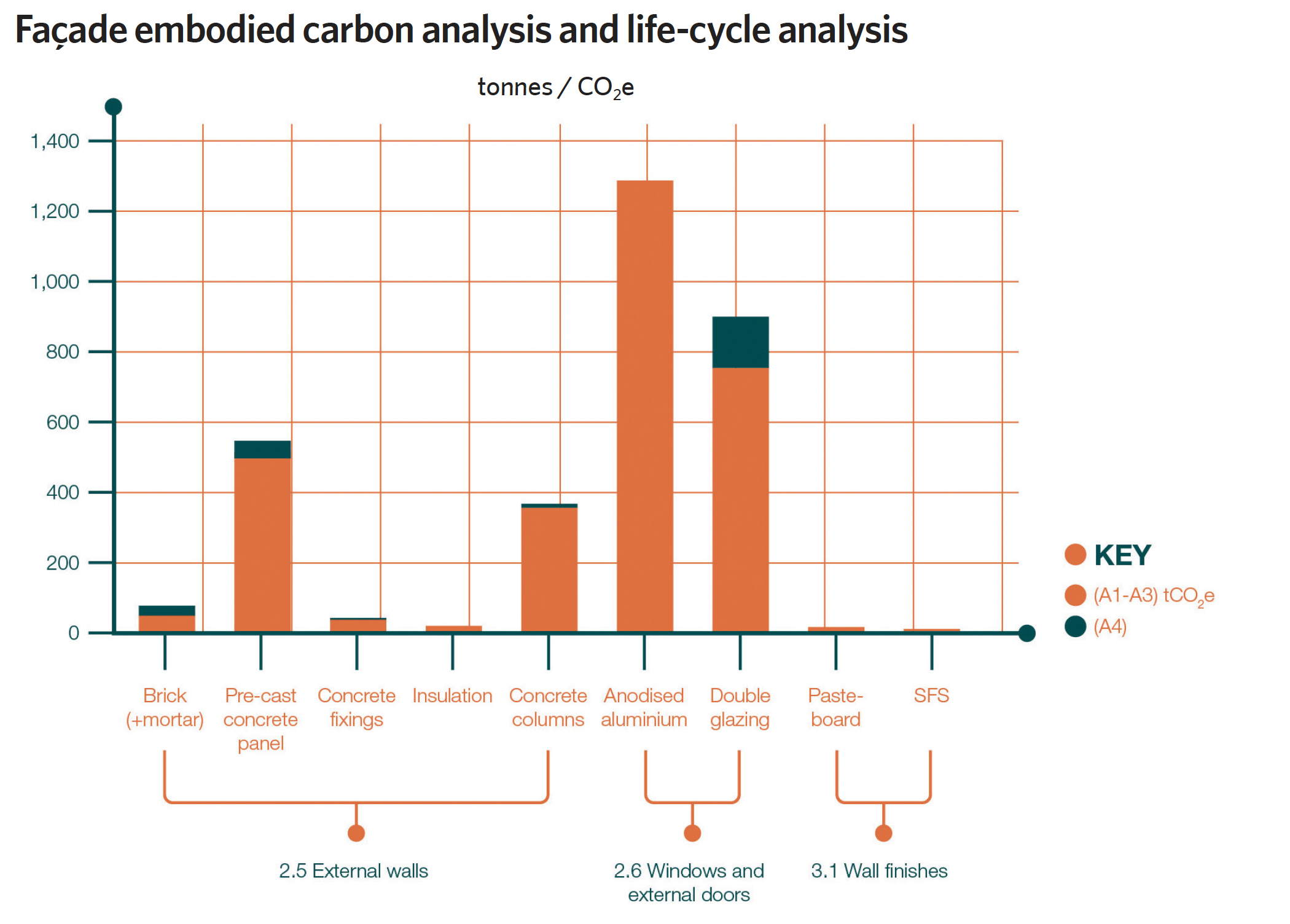

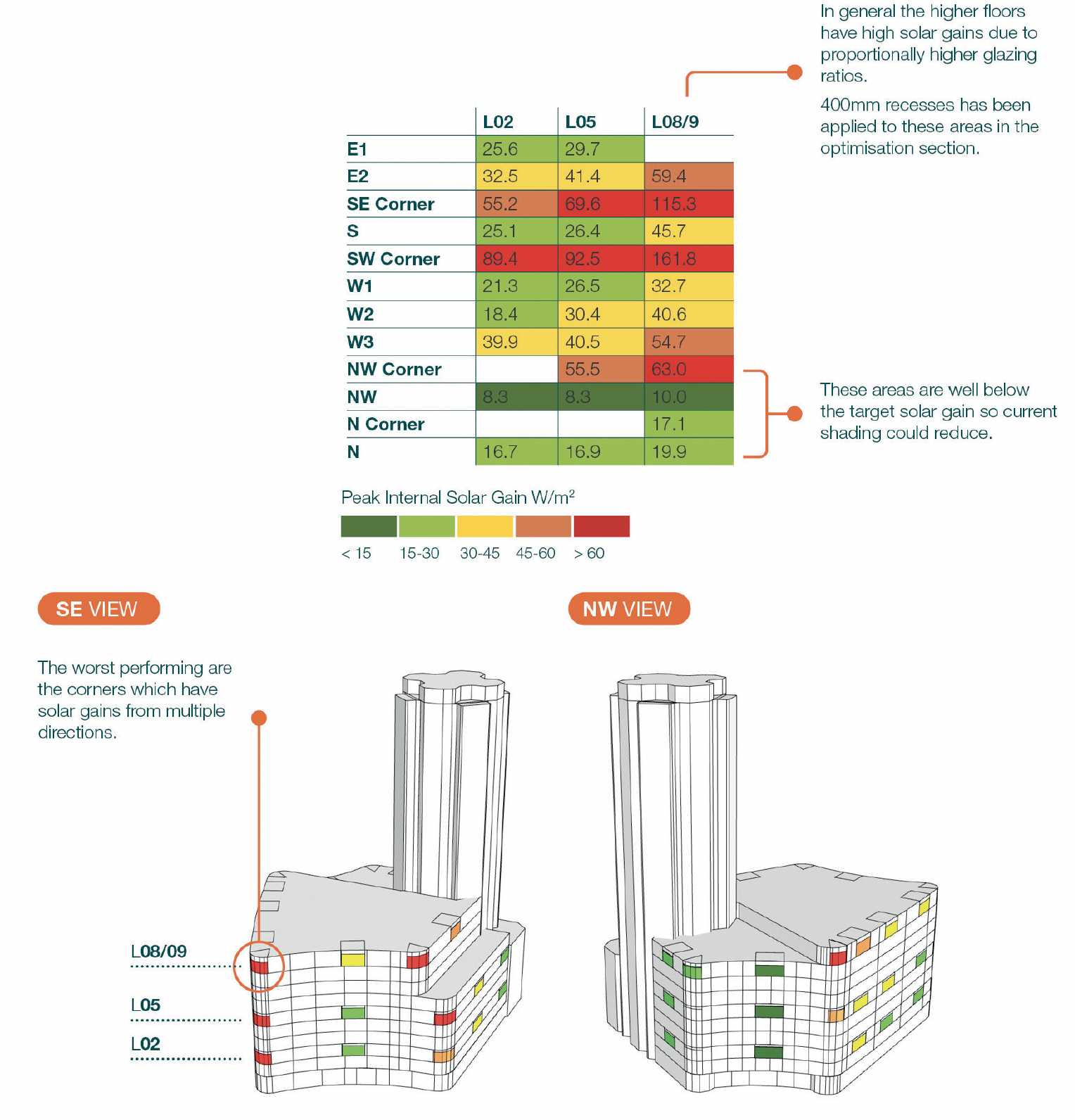

Parametric solar gain analysis at Canada Water: the solar radiation analysis shows the peak solar gains on the base-case façade of the commercial development (without the second tower). The areas with the highest gains require additional shading, window recesses and glass with lower g-values to reduce heat gain. Areas in green appear to meet the target solar gain.

Canada Water net zero carbon case study

Canada Water Plot F includes a significant office building of 38,925m² and 410 residential units in two towers. The towers are positioned at 45 degrees to one another to respond to the street pattern.

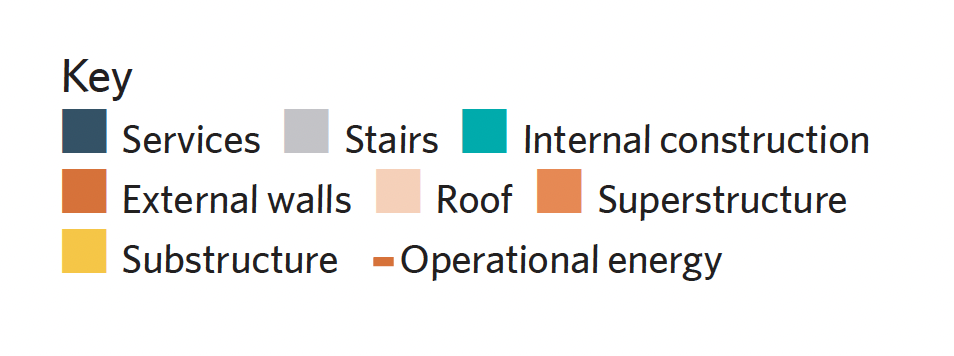

The client’s ambition to be net zero in use was translated into primary numerical targets, based on industry benchmarks, covering both embodied carbon and operational energy. For the office, these were 90kWh/m² per year operational energy (aligned with the UK Green Building Council’s targets, and recognising the likely landlord-tenant split of 55kWh/m² per year for the base build and 35kWh/m² per year for tenant use) and 500kgCO₂e/m² embodied carbon. Secondary targets supporting delivery of the primary targets were then informed by team experience and other industry benchmarks, notably LETI’s proportional breakdowns of total embodied carbon. For example, allocating 16% of the overall carbon figure to façades gave the architectural team a component-specific embodied carbon target to inform design development.
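The arithmetic behind the primary and secondary targets can be expressed as a short sketch. It checks that the landlord and tenant operational allowances add up to the 90kWh/m² per year total, and splits the 500kgCO₂e/m² embodied carbon target into element allowances by proportional share. Only the 16% façade share comes from the case study; the structure and ‘other’ shares are hypothetical placeholders. The sketch also assumes the share is applied directly to the office target, which gives a façade allowance of 80kgCO₂e/m².

```python
# Minimal sketch: deriving secondary element targets from a primary target.
# Values marked "case study" come from the text; everything else is hypothetical.

OPERATIONAL_TARGET = 90.0  # kWh/m2 per year, office (case study)
BASE_BUILD = 55.0          # kWh/m2 per year, landlord share (case study)
TENANT = 35.0              # kWh/m2 per year, tenant share (case study)
EMBODIED_TARGET = 500.0    # kgCO2e/m2, office (case study)

# Proportional shares of total embodied carbon. Only the facade share is from
# the case study; the other two are hypothetical placeholders.
SHARES = {"facade": 0.16, "structure": 0.40, "other": 0.44}


def check_operational_split(total, base_build, tenant):
    """Confirm the landlord and tenant allowances add up to the total."""
    return abs((base_build + tenant) - total) < 1e-9


def secondary_targets(total, shares):
    """Split a primary target into element allowances by proportional share."""
    if abs(sum(shares.values()) - 1.0) > 1e-9:
        raise ValueError("shares must sum to 1")
    return {element: total * share for element, share in shares.items()}


if __name__ == "__main__":
    print("Operational split consistent:",
          check_operational_split(OPERATIONAL_TARGET, BASE_BUILD, TENANT))
    for element, value in secondary_targets(EMBODIED_TARGET, SHARES).items():
        print(f"{element:<10} {value:6.1f} kgCO2e/m2")
```

Raising an error when the shares do not sum to one is a deliberate choice: a breakdown that silently leaks or double-counts carbon would undermine the whole-building check the secondary targets are meant to support.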

Façade development

The façade of the buildings represented a key intersection between architecture, structure and MEP engineering. The design of the skin of the building, therefore, illustrates the iterative and collaborative nature of delivering zero carbon buildings.

To achieve very low operational energy levels, the MEP engineer set very low upper limits for perimeter solar gains entering the office spaces. This set the architects the challenge of balancing daylight, gains, architectural expression and embodied carbon. The first piece of analysis was therefore a simple, headline exploration of the gains on the office façades (see figure above). This developed into more detailed and iterative explorations of the façade geometry: parametric analysis of the façades (which also allowed embodied carbon to be assessed alongside operational energy) and CIBSE TM59 assessments of the residential façade proposals.

The parametric analysis (above) enabled conversations between architects, engineers, the client and planners, while using carbon and energy performance as the driving metric to assess design development.
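A parametric study of the kind described above can be approximated, for illustration only, by sweeping a few façade variables, filtering the options against a solar gain limit and tallying embodied carbon at the same time. The sketch assumes a simplified steady-state estimate (peak irradiance × glazed area × g-value × shading factor ÷ perimeter floor area) rather than the dynamic simulation or CIBSE TM59 assessment used on the project. Every input, including the gain limit and the carbon rates, is a hypothetical placeholder.

```python
# Minimal sketch of a parametric facade sweep. Every input is a hypothetical
# placeholder, and the gain estimate is a simplified steady-state calculation,
# not a dynamic simulation or a CIBSE TM59 assessment.
from itertools import product

PEAK_IRRADIANCE = 500.0  # W/m2 incident on the facade
FLOOR_TO_FLOOR = 4.0     # m
ZONE_DEPTH = 6.0         # m, depth of the perimeter zone
GAIN_LIMIT = 40.0        # W/m2 of perimeter floor, engineer's upper limit
SHADING_FACTOR = 0.6     # fraction of gain passing a recess or fin
EC_GLAZED = 150.0        # kgCO2e per m2 of glazed facade
EC_OPAQUE = 100.0        # kgCO2e per m2 of opaque facade
EC_SHADING = 20.0        # extra kgCO2e per m2 of facade for shading elements


def solar_gain(wwr, g_value, shaded):
    """Peak solar gain per m2 of perimeter floor for a 1 m wide facade strip."""
    glazed_area = wwr * FLOOR_TO_FLOOR  # m2 of glass per metre of facade
    factor = SHADING_FACTOR if shaded else 1.0
    return PEAK_IRRADIANCE * glazed_area * g_value * factor / ZONE_DEPTH


def facade_carbon(wwr, shaded):
    """Embodied carbon per m2 of facade for a given window-to-wall ratio."""
    carbon = wwr * EC_GLAZED + (1 - wwr) * EC_OPAQUE
    return carbon + (EC_SHADING if shaded else 0.0)


def sweep(wwrs=(0.4, 0.5, 0.6, 0.7), g_values=(0.25, 0.35, 0.45)):
    """Return passing options as (carbon, gain, wwr, g, shaded), lowest carbon first."""
    passing = []
    for wwr, g, shaded in product(wwrs, g_values, (False, True)):
        gain = solar_gain(wwr, g, shaded)
        if gain <= GAIN_LIMIT:
            passing.append((facade_carbon(wwr, shaded), gain, wwr, g, shaded))
    return sorted(passing)


if __name__ == "__main__":
    for carbon, gain, wwr, g, shaded in sweep()[:5]:
        print(f"WWR {wwr:.0%}, g={g:.2f}, shaded={shaded}: "
              f"{gain:5.1f} W/m2, {carbon:5.1f} kgCO2e/m2")
```

With these placeholder inputs, the lowest-carbon passing option is the unshaded 40% glazing ratio with a 0.25 g-value; any larger glazing ratio only passes once shading is added, and the shading in turn adds embodied carbon. This is a toy version of the trade-off between solar gain and embodied carbon that a parametric model makes visible.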

The whole life carbon assessments for the project revealed that upfront embodied carbon emissions would be as important as operational energy emissions over the life of the building. Alongside the parametric development of the façade geometry, the design team worked on optimising embodied carbon. The structural engineer examined various structural options, providing data to compare each option against the secondary carbon targets, while the architect visualised the formal and aesthetic implications.

As the architecture evolved, the team developed a hybrid ventilation approach to the perimeter zones of the office building. This reinforced the need for low solar gains and introduced opening vents. The team examined the façade in terms of both upfront embodied carbon and whole life emissions. This dual analysis provided an elemental breakdown of the carbon intensity of a façade bay and informed the design for repair, maintenance and replacement throughout the life of the building.
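An elemental, whole-life breakdown of a façade bay can be sketched by giving each component an upfront (A1-A3) value and a service life, then counting replacements over the study period. This is a simplified illustration that assumes a 60-year study period and treats each replacement as a repeat of the component’s A1-A3 impact; it ignores transport, construction, maintenance and end-of-life modules. The component list and all figures are hypothetical.

```python
# Minimal sketch: upfront vs whole-life embodied carbon for one facade bay.
# Component names and figures are hypothetical; B4 (replacement) is simplified
# as repeating each component's A1-A3 impact once per replacement.
import math
from dataclasses import dataclass

STUDY_PERIOD = 60  # years


@dataclass
class Component:
    name: str
    upfront: float     # kgCO2e per bay, modules A1-A3
    service_life: int  # years before replacement

    def replacements(self, period=STUDY_PERIOD):
        """Replacements needed within the study period (initial install excluded)."""
        return max(math.ceil(period / self.service_life) - 1, 0)

    def whole_life(self, period=STUDY_PERIOD):
        """Initial supply plus one repeat of the A1-A3 impact per replacement."""
        return self.upfront * (1 + self.replacements(period))


BAY = [
    Component("aluminium frame", 320.0, 60),
    Component("double glazed unit", 210.0, 30),
    Component("spandrel panel", 90.0, 40),
    Component("gaskets and seals", 10.0, 20),
    Component("vent actuator", 15.0, 15),
]


def bay_totals(bay, period=STUDY_PERIOD):
    """Return (upfront, whole_life) totals for a list of components."""
    upfront = sum(c.upfront for c in bay)
    whole = sum(c.whole_life(period) for c in bay)
    return upfront, whole


if __name__ == "__main__":
    for c in sorted(BAY, key=lambda c: c.whole_life(), reverse=True):
        print(f"{c.name:<20} upfront {c.upfront:6.1f}  whole life {c.whole_life():6.1f}"
              f"  ({c.replacements()} replacements)")
    upfront, whole = bay_totals(BAY)
    print(f"Bay total: upfront {upfront:.1f}, whole life {whole:.1f} kgCO2e")
```

With these placeholder figures the bay’s upfront total is 645kgCO₂e, but its whole-life total is 1,010kgCO₂e. Short-lived parts such as the vent actuator contribute several times their upfront value, which is the kind of result that informs design for repair and replacement.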

The results highlighted, for example, that the commercial building’s façade had an embodied carbon of 88.5kgCO₂e/m² (RICS modules A1-A3). This was almost double the LETI benchmark for the RIBA 2030 target, estimated at 46.8kgCO₂e/m². Adding this value into the cumulative whole-building total allowed the team to use the low embodied carbon of the structure to ‘compensate’ for the higher façade value.
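The ‘compensation’ described above amounts to checking element-level variances against a whole-building budget. In the sketch below, the 88.5 and 46.8kgCO₂e/m² façade figures and the 500kgCO₂e/m² office target come from the case study; the structure and ‘other’ values and all the allocations are hypothetical. For illustration, every figure is also assumed to be on the same basis (same floor-area denominator and life-cycle modules), which the published numbers may not be.

```python
# Minimal sketch: offsetting an over-target element against savings elsewhere.
# Facade figures and the budget are from the case study; the rest is hypothetical.

FACADE_ACTUAL = 88.5  # kgCO2e/m2, A1-A3 (case study)
LETI_FACADE = 46.8    # kgCO2e/m2, benchmark quoted in the case study
BUDGET = 500.0        # kgCO2e/m2, office embodied carbon target (case study)

# Hypothetical allocations and results; assumed to share one basis for illustration.
ALLOCATION = {"facade": 80.0, "structure": 200.0, "other": 220.0}
ACTUAL = {"facade": FACADE_ACTUAL, "structure": 170.0, "other": 215.0}


def variances(allocation, actual):
    """Per-element difference from allocation: positive is over, negative is a saving."""
    return {element: actual[element] - allocation[element] for element in allocation}


def within_budget(actual, budget):
    """True if the whole-building total does not exceed the budget."""
    return sum(actual.values()) <= budget


if __name__ == "__main__":
    print(f"Facade vs LETI benchmark: {FACADE_ACTUAL / LETI_FACADE:.2f}x")
    for element, delta in variances(ALLOCATION, ACTUAL).items():
        print(f"{element:<10} {delta:+6.1f} kgCO2e/m2")
    total = sum(ACTUAL.values())
    print(f"Whole building: {total:.1f} of {BUDGET:.0f} kgCO2e/m2, "
          f"within budget: {within_budget(ACTUAL, BUDGET)}")
```

With these placeholder values, the façade overshoots its allocation by 8.5kgCO₂e/m² while the structure comes in 30kgCO₂e/m² under, so the building total of 473.5kgCO₂e/m² stays within the 500kgCO₂e/m² target.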

The case study takes the reader from first principles to examining more nuanced decision-making, identifying some of the trade-offs required to drive down whole life carbon on a live commercial project.

The lessons point to a way of working that is much more collaborative and a process that is more iterative in all disciplines – particularly engineering, where consultants are perhaps not as accustomed to the trial and error of a design development process that is not always linear.

About the author

Dr Craig Robertson is head of sustainability and building performance, and Dr Simon Hatherly is senior building performance architect, at AHMM.